This article comes from our partner Fabien Stepho of Object’ive and was originally published on medium.com.

Bitcoin transactions became very expensive at the end of 2017, with fees rising to $55 as demand increased considerably. I wanted to analyze what was happening on the bitcoin network.

This story describes how I went about analyzing hundreds of millions of bitcoin transactions.

The tools and frameworks I used are listed below. Apart from the Microsoft Azure cloud platform, they are all open source:

- Bcoin: JavaScript bitcoin library for Node.js

- Elasticsearch: RESTful, distributed search and analytics

- Kibana: tool to explore, visualize, and discover data

- Docker: open platform for developers and sysadmins to build, ship, and run distributed applications

- Microsoft Azure: cloud platform

Here are the steps I followed to accomplish my goal:

Step 1 — Installing the cloud infrastructure

Step 2 — Running and syncing a full bitcoin node with the complete blockchain data

Step 3— Extracting bitcoin data from the local blockchain

Step 4 — Indexing the data into Elasticsearch

Step 5 — Visualizing and analysing data

Step 1 — Installing and defining the cloud infrastructure

The first step was to set up the platform. A virtual machine with at least 500 GB of disk space is required to hold the blockchain data and the Elasticsearch indexes.

Here is an overview of the Azure cloud portal interface:

Docker is a must-have for packaging and running isolated containers.

My Docker Compose definition consists mainly of the bitcoin node container, the Elasticsearch container and the Kibana container, plus an elasticsearch-head container for browsing the cluster:

version: '2'
services:
  bcoin:
    build: .
    container_name: bcoin
    restart: unless-stopped
    ports:
      #-- Mainnet
      - "8333:8333"
      - "8332:8332" # RPC
      #-- Testnet
      #- "18333:18333"
      #- "18332:18332" # RPC
    environment:
      BCOIN_CONFIG: /data/bcoin.conf
      VIRTUAL_HOST: bcoin.yourdomain.org
      VIRTUAL_PORT: 8332 # Mainnet
      #VIRTUAL_PORT: 18332 # Testnet
    networks:
      - "bcoin"
    volumes:
      - data:/data
      - ./secrets/bcoin.conf:/data/bcoin.conf
      - ./app:/code/app

  elasticsearch:
    image: docker.elastic.co/elasticsearch/elasticsearch:6.0.0
    container_name: elasticsearch
    hostname: elasticsearch
    environment:
      - cluster.name=bitcoin-cluster
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms1400m -Xmx1400m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - esdata:/usr/share/elasticsearch/data
      - ./elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml
    ports:
      - 9200:9200
    networks:
      - bcoin

  kibana:
    image: docker.elastic.co/kibana/kibana:6.0.0
    container_name: kibana
    hostname: kibana
    volumes:
      - ./kibana.yml:/usr/share/kibana/config/kibana.yml
    ports:
      - 5601:5601
    networks:
      - bcoin

  head:
    image: mobz/elasticsearch-head:5
    container_name: head
    ports:
      - 9100:9100
    networks:
      - bcoin

networks:
  bcoin:
    external:
      name: "bcoin"

volumes:
  data:
  esdata:

As you can see, the data is not stored inside the containers but on the VM host, through Docker volumes, so the generated data is not lost when containers are restarted or deleted.

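Because the bcoin network is declared as external, it has to be created once beforehand with docker network create bcoin. The images are then built and the containers started with docker-compose up, and the running containers on the host VM can be listed with docker ps: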

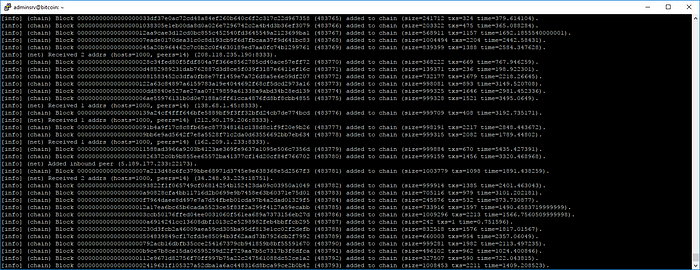

Step 2 — Running and syncing a full bitcoin node with the complete blockchain data

I chose to run the open source bcoin library.

The project is hosted on GitHub: https://github.com/bcoin-org/bcoin. I first tried the Bitcore library, but I ran into performance issues while syncing.

Syncing the blockchain data was slow and took several days, so I decided to switch to bcoin, which also let me write the code for steps 3 and 4 in Node.js.

Step 3 — Extracting bitcoin data from the local blockchain

Using the bcoin API, I wrote some code to retrieve bitcoin transaction data from the running full node.

The API function I used is client.getBlock(height). For each block, the script attaches the block height, hash and time to every transaction and then pushes it to the Elasticsearch indexes:

'use strict';

const defaults = require('../lib/helpers').getDefaultClients();
const client = defaults.client;
const esIndexer = require('./es.js');

indexTxs();

async function indexTxs() {
  /**
   * Get blocks by height, from 0 up to (but excluding) blockHeight.
   * The same data can be queried manually with:
   *   curl $url/block/$blockHeight
   *   bcoin cli block $blockHeight
   */
  const blockHeight = 560000;

  try {
    for (let height = 0; height < blockHeight; height++) {
      const block = await client.getBlock(height);
      if (!block) {
        // Stop with a clear error if the node does not have this block (e.g. not fully synced)
        throw new Error("Block " + height + " not found");
      }
      console.log("Processing Block : " + height + " (" + block.txs.length + " txs)");

      for (let i = 0; i < block.txs.length; i++) {
        const tx = block.txs[i];

        // Attach the block metadata to the transaction before indexing it
        tx.blockheight = block.height;
        tx.blockhash = block.hash;
        tx.time = block.time;

        // Force a final bulk flush on the very last transaction
        const forceIndex = (height === blockHeight - 1) && (i === block.txs.length - 1);
        await esIndexer.indexItem(tx, forceIndex);
      }
    }
  } catch (e) {
    console.error(e);
  }
}
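The comment in the script mentions that the same block can be fetched with curl. As a minimal sketch of that HTTP route, assuming the node's REST endpoint GET /block/:height on the RPC port mapped in the Compose file and leaving out API-key authentication, the two hypothetical helpers below fetch a block and attach the block metadata to copies of its transactions, without mutating the block. fetchImpl defaults to the global fetch of Node.js 18+ and can be swapped for a stub.

```javascript
'use strict';

// Hypothetical helper (assumption): fetch a block by height from the node's
// REST endpoint GET /block/:height. Authentication is deliberately left out;
// fetchImpl defaults to the global fetch of Node.js 18+.
async function fetchBlock(baseUrl, height, fetchImpl = globalThis.fetch) {
  if (!Number.isInteger(height) || height < 0) {
    throw new RangeError('invalid block height: ' + height);
  }
  const res = await fetchImpl(baseUrl + '/block/' + height);
  if (!res.ok) {
    throw new Error('GET /block/' + height + ' failed with HTTP ' + res.status);
  }
  return res.json();
}

// Hypothetical helper: returns copies of the block's transactions carrying
// the metadata the indexer expects (blockheight, blockhash, time), leaving
// the original block object untouched.
function withBlockMetadata(block) {
  return block.txs.map((tx) => ({
    ...tx,
    blockheight: block.height,
    blockhash: block.hash,
    time: block.time,
  }));
}
```

A loop over heights could then call withBlockMetadata(await fetchBlock('http://127.0.0.1:8332', height)) and hand each transaction to the indexer; the URL is an illustrative assumption.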

Step 4 — Indexing the data into Elasticsearch

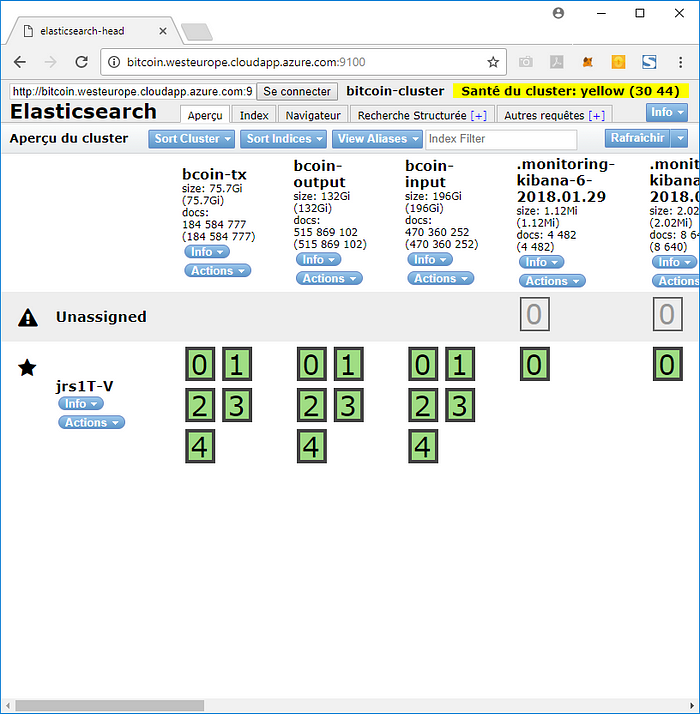

I created three indexes to hold the data: one for transaction outputs (bcoin-output), one for transaction inputs (bcoin-input) and one for the transactions themselves (bcoin-tx). The indexed documents contain fields such as address, block height, block hash, fee, value and coinbase flag.

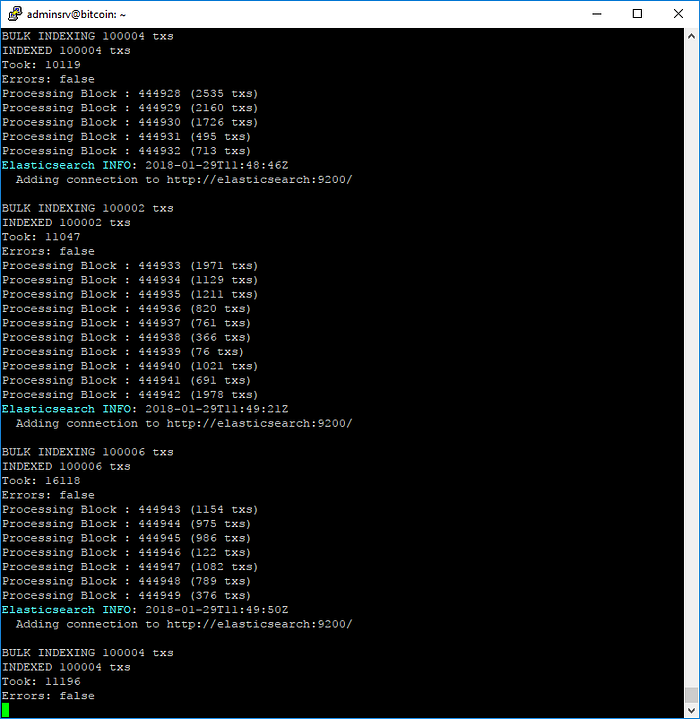
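The article does not say whether the three indexes were created with explicit mappings. As a hedged sketch for Elasticsearch 6.x, with the _type name taken from the indexing code and field types that are assumptions chosen for illustration, a create-index body for bcoin-output could look like this. Mapping address and hash as keyword makes them exact-match fields that Kibana can aggregate on; value is mapped as double because this sketch makes no assumption about whether amounts arrive as integer satoshis or decimal BTC.

```javascript
'use strict';

// Hypothetical create-index body for bcoin-output (Elasticsearch 6.x: one
// mapping type per index, here "output" as in the indexing code).
// All field types below are illustrative assumptions.
const outputIndexBody = {
  mappings: {
    output: {
      properties: {
        type: { type: 'keyword' },        // document kind ("output")
        blockhash: { type: 'keyword' },
        blockheight: { type: 'integer' },
        hash: { type: 'keyword' },        // transaction id
        date: { type: 'date' },           // block time, sent as an ISO string
        value: { type: 'double' },        // accepts integer or decimal amounts
        address: { type: 'keyword' },     // exact match, aggregatable
      },
    },
  },
};
```

With the legacy elasticsearch client, this object could be passed as body to esClient.indices.create({ index: 'bcoin-output', body: outputIndexBody }) before the first document is indexed, since the type of an existing field cannot be changed afterwards.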

The code that pushes the data into Elasticsearch uses the JavaScript Elasticsearch client and its bulk API, which sends many index requests in a single call. This is particularly useful here because there are a lot of transactions to index: documents are queued in memory and sent in large batches. In my case the buffer is flushed once it holds more than 100 000 entries; since each document takes two entries (an action line and the document itself), that is roughly 50 000 documents per batch.
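The batching logic can be sketched without any Elasticsearch dependency. In this minimal sketch, BulkBuffer and toNdjson are hypothetical helpers and the flush callback stands in for a call to the client's bulk method. Each queued document costs two entries, which is why a threshold of 100 000 entries means roughly 50 000 documents per batch; toNdjson shows the newline-delimited JSON that the _bulk endpoint receives on the wire.

```javascript
'use strict';

// Hypothetical helper: serialises bulk entries as NDJSON, the wire format of
// the Elasticsearch _bulk endpoint (one JSON object per line, and a final
// newline after the last line).
function toNdjson(entries) {
  return entries.map((entry) => JSON.stringify(entry)).join('\n') + '\n';
}

// Hypothetical buffer: queues (action, document) pairs and hands them to an
// async flush callback once more than maxEntries entries are pending.
class BulkBuffer {
  constructor(flush, maxEntries = 100000) {
    this.flush = flush;
    this.maxEntries = maxEntries;
    this.entries = [];
  }

  // Queue one document; each document costs two entries (action + source).
  async add(index, type, doc) {
    this.entries.push({ index: { _index: index, _type: type } }, doc);
    if (this.entries.length > this.maxEntries) {
      await this.drain();
    }
  }

  // Send whatever is pending. The buffer is swapped out before awaiting, so
  // documents added meanwhile go to the next batch; a batch whose flush
  // throws is not re-queued.
  async drain() {
    if (this.entries.length === 0) return;
    const batch = this.entries;
    this.entries = [];
    await this.flush(batch);
  }
}
```

Because the legacy JavaScript client's bulk method accepts the same array of alternating action and document objects as its body (as the indexing code uses it), a flush callback could simply be batch => esClient.bulk({ body: batch }), followed by a final drain() once the last block has been processed.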

Here is the indexing code:

'use strict';

const elasticsearch = require('elasticsearch');

// Pending bulk entries: every document adds two entries,
// an action description followed by the document itself
let body = [];

exports.indexItem = async function (item, forceIndex) {
  console.log("Adding " + item.hash + " for bulk indexing");

  // Index the transaction outputs (vout)
  item.outputs.forEach(function (vout) {
    const toIndex = {
      type: "output",
      blockhash: item.blockhash,
      blockheight: item.blockheight,
      hash: item.hash,
      date: new Date(item.time * 1000),
      value: vout.value,
      //script: vout.script,
      address: vout.address
    };
    // Action description
    body.push({ index: { _index: 'bcoin-output', _type: 'output' } });
    body.push(toIndex);
  });

  // Index the transaction inputs (vin)
  item.inputs.forEach(function (vin) {
    const toIndex = {
      type: "input",
      blockhash: item.blockhash,
      blockheight: item.blockheight,
      hash: item.hash,
      date: new Date(item.time * 1000),
      prevout_hash: vin.prevout.hash,
      prevout_index: vin.prevout.index,
      //script: vin.script,
      witness: vin.witness,
      sequence: vin.sequence,
      address: vin.address
    };
    // Details of the coin being spent, when the node provides them
    if (vin.coin) {
      toIndex.utxo_version = vin.coin.version;
      toIndex.utxo_height = vin.coin.height;
      toIndex.utxo_value = vin.coin.value;
      //toIndex.utxo_script = vin.coin.script;
      toIndex.utxo_address = vin.coin.address;
      toIndex.utxo_coinbase = vin.coin.coinbase;
    }
    // Action description
    body.push({ index: { _index: 'bcoin-input', _type: 'input' } });
    body.push(toIndex);
  });

  // Index the transaction itself
  const toIndex = {
    type: "tx",
    blockhash: item.blockhash,
    blockheight: item.blockheight,
    hash: item.hash,
    date: new Date(item.time * 1000),
    witnessHash: item.witnessHash,
    fee: item.fee,
    rate: item.rate,
    mtime: item.mtime,
    index: item.index,
    version: item.version,
    locktime: item.locktime
    //hex: item.hex
  };
  // Action description
  body.push({ index: { _index: 'bcoin-tx', _type: 'tx' } });
  body.push(toIndex);

  // Flush when the buffer exceeds 100 000 entries (about 50 000 documents)
  // or when the caller forces it for the last transaction
  if (body.length > 100000 || forceIndex) {
    const esClient = new elasticsearch.Client({
      host: 'elasticsearch:9200',
      log: 'info',
      requestTimeout: 1000 * 60 * 60,
      timeout: 1000 * 60 * 60,
      keepAlive: false,
      deadTimeout: 6000000,
      maxRetries: 15
    });
    const docCount = body.length / 2;
    try {
      console.log("BULK INDEXING " + docCount + " documents");
      const resp = await esClient.bulk({ body });
      console.log("INDEXED " + docCount + " documents");
      console.log("Took: " + resp.took + " ms");
      console.log("Errors: " + resp.errors);
      // Only clear the buffer once the bulk request has succeeded
      body = [];
    } finally {
      esClient.close();
    }
  }
};
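The script logs resp.errors, but that is a single boolean for the whole request: a bulk call can succeed overall while individual documents are rejected. As a minimal sketch, assuming the standard bulk response shape (an items array with one entry per action, keyed by the action name and carrying a status and, on failure, an error object), the hypothetical helper failedBulkItems collects the rejected items so they can be logged or retried.

```javascript
'use strict';

// Hypothetical helper: returns the failed items of an Elasticsearch bulk
// response together with their position in the request.
function failedBulkItems(resp) {
  // resp.errors is false when every action succeeded
  if (!resp || !resp.errors) return [];
  const failed = [];
  resp.items.forEach((item, position) => {
    // Each item has a single key naming the action (index, create, ...)
    const [action, result] = Object.entries(item)[0];
    if (result.error) {
      failed.push({ position, action, status: result.status, error: result.error });
    }
  });
  return failed;
}
```

Since every index action in this script is followed by exactly one document, the rejected document for an item at position p is body[2 * p + 1] in the array that was sent.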

After starting the script, I waited several hours until 500 000 blocks were fully indexed:

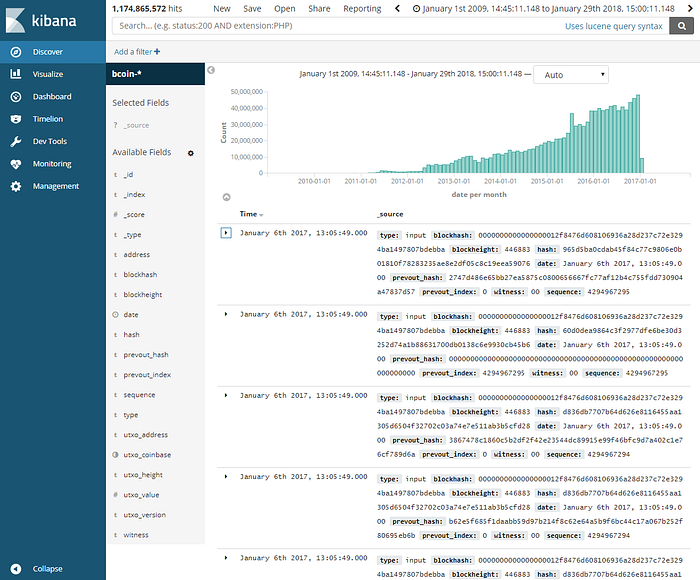

Step 5 — Visualizing and analysing data

Once the data was indexed, I connected Kibana, the visualization tool of the Elastic Stack, to the indexes:

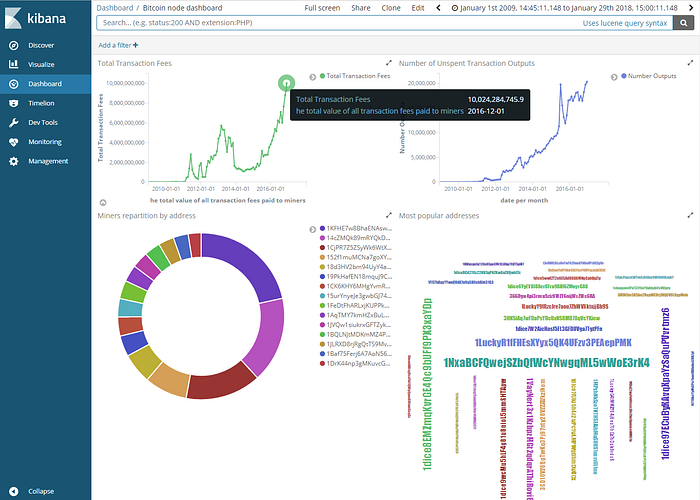

I defined several visualizations and created a simple dashboard with metrics such as “Total transaction fees”, “Number of unspent transaction outputs”, “Miner distribution” and “Most popular addresses”.
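Kibana builds metrics like these from Elasticsearch aggregations. As a hedged sketch with hypothetical helper names, the request bodies behind the “Total transaction fees” metric and the “Most popular addresses” table could be a sum aggregation over fee in bcoin-tx (assuming fee is mapped as a number) and a terms aggregation over the output addresses in bcoin-output. With dynamic mapping, Elasticsearch 6 maps strings as text plus a keyword sub-field, so the aggregatable field is address.keyword; with an explicit keyword mapping it is simply address.

```javascript
'use strict';

// Hypothetical helper: search body for bcoin-tx summing all fees.
// size: 0 skips returning hits; only the aggregation result is needed.
function totalFeesQuery() {
  return {
    size: 0,
    aggs: { total_fees: { sum: { field: 'fee' } } },
  };
}

// Hypothetical helper: search body for bcoin-output returning the topN
// addresses that appear in the most outputs (terms buckets are ordered by
// document count by default). The field name depends on the mapping.
function popularAddressesQuery(topN = 10, field = 'address.keyword') {
  return {
    size: 0,
    aggs: { popular_addresses: { terms: { field, size: topN } } },
  };
}
```

With the legacy client, esClient.search({ index: 'bcoin-tx', body: totalFeesQuery() }) would return the total in resp.aggregations.total_fees.value.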

Conclusion

With these five steps done, I can now do things like:

- Transaction monitoring

- Complex querying

- Exploring anomalies with machine learning

- Analyzing transaction relationships with Graph

My next story will cover these use cases, so stay tuned!

Note: the source code is freely available in my GitHub repository: https://github.com/fstepho/bcoin-es

You can follow Fabien Stepho on GitHub for cryptocurrency development ideas.